Intel Xeon FPGA Coprocessor

At the GigaOm Structure cloud computing conference last week, Intel mentioned it will be integrating an FPGA coprocessor into Xeon CPUs. Is this another wave of the future or a turbocharged reboot of Intel’s Stellarton hype orchestrated by Intel, Altera and Microsoft?

Here’s a guess based on my experience. It should be a good guess too since I have developed multiple FPGA coprocessor applications and even co-authored a few patents on the practice that have since been granted to Lockheed Martin Corporation.

First, a little background.

What is an FPGA?

An FPGA (Field Programmable Gate Array) is a specialized microchip that can be “rewired” via a special program to implement any custom computer logic in hardware. This customized “hardware” can then be used to accelerate compute intensive tasks such as video, image and signal processing up to 4000% in some cases! Or, so the story goes.

Traditionally, FPGAs have been used by electrical and computer engineers (mostly hardware guys and girls) to create semi-custom microchips capable of consolidating a number of disparate interfaces, glue logic and maybe a little signal processing into a single chip on printed circuit boards.

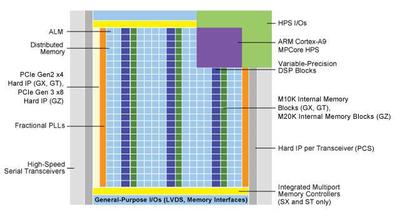

In the last decade, however, FPGA companies like Altera and Xilinx saw the ever increasing amounts of chip real-estate afforded to them by Moore’s law as an opportunity to add more complex “building blocks” to their FPGAs. These programmable building blocks include memories, multipliers, floating point units, and even CPUs, making FPGAs better suited to certain signal processing tasks than CPUs alone.

Altera’s latest Stratix 10 FPGA, for example, boasts up to 10 Teraflops (single precision) and a 64-bit quad-core ARM Cortex-A53 processor among other computing resources. It’s fairly efficient too, delivering 100 Gigaflops / Watt. That’s a Teraflop for just 10 Watts.
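The efficiency claim is just unit arithmetic, and it is worth checking. Here is a quick sketch in Python that uses only the two vendor figures quoted above. The function name is mine, and it assumes the quoted efficiency holds all the way up to peak throughput, which marketing numbers rarely guarantee.

```python
def watts_needed(gflops: float, gflops_per_watt: float) -> float:
    """Power in watts needed to sustain a given throughput at a fixed efficiency."""
    return gflops / gflops_per_watt


# Vendor figures quoted above: 100 Gigaflops per Watt, up to 10 Teraflops peak.
EFFICIENCY_GFLOPS_PER_WATT = 100.0

one_teraflop_watts = watts_needed(1_000.0, EFFICIENCY_GFLOPS_PER_WATT)
peak_watts = watts_needed(10_000.0, EFFICIENCY_GFLOPS_PER_WATT)

print(f"1 Teraflop:   {one_teraflop_watts:.0f} W")
print(f"10 Teraflops: {peak_watts:.0f} W")
```

So one Teraflop works out to 10 Watts as claimed, and the full 10 Teraflops would draw on the order of 100 Watts if the efficiency figure held at peak.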

Is History Repeating Itself?

About eight years ago I was doing R&D that used FPGA coprocessors towards a very similar goal of accelerating compute intensive tasks for U.S. Navy ships and unmanned underwater vehicles (UUV).

I would have been very excited to hear news like this back then. In fact, my R&D co-workers and I used to get excited at the very idea of a company putting an FPGA coprocessor on a PC or server motherboard let alone in the same socket as a Xeon chip with fast access to its massive cache and main memory.

Now, I know better.

Reportedly Intel is doing this in an attempt to please and retain enterprise customers like eBay and Facebook who have recently been looking towards ARM and AMD processors to scale their massive IT cloud computing infrastructure more efficiently.

Plus, the guys at Facebook and eBay are big geeks (like I am) so I understand them. When nerds have a problem and money to spend they seem to develop a high tech itch to scratch.

This is a familiar story to me because the FPGA R&D project I was working on eight years ago was born from roughly the same problem with Intel’s rate of innovation.

Back then, the division of Lockheed Martin I worked for was looking to IBM and AMD for more compute power & efficiency because Intel performance was stagnating.

Each new Intel processor was only marginally faster yet consumed disproportionately more power. AMD chips and IBM’s G4 PowerPC were not much better, so a search for an answer to the problem continued.

Someone did a back-of-the-envelope calculation on FPGAs and came up with a potential speedup of 50-100x over Intel, AMD and PowerPC, and a 4-year R&D project was born.

In those 4 years our greatest performance achievement was implementing a 2-D spectral beam-former in an FPGA that was 20x faster than a high end G4 CPU while consuming about one fifth the power. Despite our success, the FPGA implementation failed to gain traction with the customer due to various cost factors.
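To put the beam-former result in perspective, speed and power can be combined into a single efficiency figure. This is a rough sketch, assuming both numbers were measured on the same workload; the function names are mine and are only for illustration.

```python
def perf_per_watt_gain(speedup: float, power_fraction: float) -> float:
    """Improvement in work done per watt: faster execution divided by relative power draw."""
    return speedup / power_fraction


def energy_per_task_fraction(speedup: float, power_fraction: float) -> float:
    """Energy per task relative to the baseline: relative power times relative run time."""
    return power_fraction / speedup


SPEEDUP = 20.0        # FPGA beam-former vs. high end G4, from the text
POWER_FRACTION = 0.2  # "about one fifth the power", from the text

print(f"performance per watt gain: {perf_per_watt_gain(SPEEDUP, POWER_FRACTION):.0f}x")
print(f"energy per task: {energy_per_task_fraction(SPEEDUP, POWER_FRACTION):.0%} of baseline")
```

That is roughly a 100x gain in work per unit of energy, which still was not enough to overcome the cost factors.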

In the end our research became somewhat moot when Intel finally addressed performance issues with their chips in subsequent generations. By shrinking their process technology, adding more cores and tweaking their architecture Intel edged out most of the competition until recently.

What’s Different Now?

There are some differences now from the last time I witnessed the “power-wall” phenomenon that make this news from Intel notable.

First, the cloud is expanding rapidly, and cloud computing applications repeat the same computation tasks at a scale many times greater than most military and aerospace applications. The 2-40x speedup Microsoft is claiming and even the 10x speedup Intel is claiming for specific tasks could greatly reduce recurring costs on power and cooling at hyperscale data centers (at least on paper anyway).

Second, big companies like Intel and Microsoft are touting the potential of FPGA technology and even claim to be seeing actual performance boosts in mainstream data center applications like search engines, according to this article by Wired Magazine. Intel will be manufacturing Altera’s latest Stratix 10 FPGA using their 14nm process and already packaged an Altera FPGA with an Atom processor a few years ago as well.

But, why now when they were able to get by for so many years without FPGAs? The answer is money. Intel stands to make a ton of it and Microsoft thinks they are going to save some.

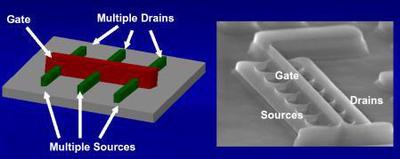

It could also be, and I know we have been hearing this for years, Moore’s law IS really coming to an end. Really, it is. We may not see a process technology capable of going smaller than Intel’s current state-of-the-art TriGate process any time soon.

This latest chip manufacturing process took Intel 9 YEARS to get into mass production. This process already went from 22nm to 14nm recently, but at 14nm a gate is about 100 atoms wide and the dielectric is only a few atoms thick! It will soon be physically impossible to shrink transistors any more and getting gates below 5nm poses very significant challenges.

And, I believe shifts in Intel’s business model, such as manufacturing devices for more companies like Altera and even would-be competitors like ARM using their own 14nm process, further signal the demise of Moore’s law.

Finally, the most important difference this time around is that there is a standard framework, OpenCL (Open Computing Language), that is gaining traction and being adopted by many big tech companies including Apple, Intel, Qualcomm, Advanced Micro Devices (AMD), Nvidia, Altera, Samsung, Vivante, Imagination Technologies and ARM Holdings.

Initially developed by Apple to make development of heterogeneous computing systems involving GPUs easier, OpenCL is a C-like language that can now be used to model and implement complex system communications between CPUs, GPUs, FPGAs and memory.

Companies like Altera are also now using OpenCL to implement FPGA designs at a much higher level. OpenCL may allow software focused engineers to implement complex FPGA designs at an algorithmic level with less experience, specialized training, and knowledge of the underlying hardware.

Traditionally FPGAs have been developed at the register level using a hardware description language (HDL) by hardware focused engineers engaged in a very tedious process using many of the same tools and processes of a regular microchip designer.

Interesting Side Note

Apple has recently patented the OpenCL standard which is supposed to be open.

This is interesting to me because the patents I worked on were supposed to protect Lockheed Martin’s framework for heterogeneous computing long before Apple published their patents. I wonder if Lockheed will demand royalties from Apple? I doubt it, but I glanced at Apple’s patents and the work is very similar. I think these Apple patents are more defensive in nature so that the company can protect a standard that is supposed to be open, from future patent trolls despite the existence of prior art.

Some Things Never Change

I know I just made a great case for Intel’s FPGA coprocessor effort, but I am actually very skeptical the technology will catch on even in “hyper-scale” data centers at this point in time.

I think the news from Intel and any subsequent buzz in the tech world echo chamber in the near term is only a stepping stone to another point 5, 10 or even 15 years down the road. The reason I think that way is because some things never change.

First, high end FPGA hardware is expensive. It’s not like Xeon E5 chips are cheap either. So, what do you get when you marry a $3,500 E5 Xeon and, let’s say, a middle of the road $5,000 Altera Stratix V FPGA? You get a $10k – $15k monster chip with an FPGA that doesn’t necessarily do anything yet. Cost scales at the data center before performance can be verified at the same scale. The high price is a function of supply and demand and this will not change until there is a much bigger demand for FPGA technology in the mainstream.

An FPGA Board Like This Turns a $5k Server into a $20k Server

But, it’s very unlikely there will ever be a huge demand for FPGA technology in the mainstream. Even the scale of cloud computing isn’t enough. There will always be applications that are a good fit for FPGAs for sure, but that number is way smaller than most people think.

It takes a lot of knowledge and years of experience to get a feel for what parts of applications are a good fit for the FPGA and will benefit enough to justify the added expenses. It’s a complex trade space so lots of smart people make the mistake of believing FPGA marketing hype and their own back of the envelope calculations that aren’t backed by enough real world experience with the technology.

Take the case of Microsoft in the Wired article again, for example. Microsoft’s Doug Burger said that the FPGAs are 40 times faster at processing Bing’s custom algorithms, yet after prototyping Microsoft now believes they would only see a 2x speedup in their datacenter. What happened? It’s harder to extract the computing power from FPGAs than most people realize.
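One common way a 40x kernel speedup shrinks to 2x end to end is Amdahl’s law: only the part of the run time that actually moves to the FPGA gets faster. The article does not say this is what happened at Microsoft, so treat the sketch below as one plausible illustration, not their analysis. The function names and the sample fractions are my own assumptions.

```python
def overall_speedup(accelerated_fraction: float, kernel_speedup: float) -> float:
    """Amdahl's law: end-to-end speedup when only part of the run time is accelerated."""
    return 1.0 / ((1.0 - accelerated_fraction) + accelerated_fraction / kernel_speedup)


def fraction_for(target_speedup: float, kernel_speedup: float) -> float:
    """Fraction of run time that must be accelerated to reach a target end-to-end speedup."""
    return (1.0 - 1.0 / target_speedup) / (1.0 - 1.0 / kernel_speedup)


KERNEL_SPEEDUP = 40.0  # figure quoted for Bing's custom algorithms

# Hypothetical fractions of total run time spent in the accelerated code.
for fraction in (0.50, 0.90, 0.99):
    speedup = overall_speedup(fraction, KERNEL_SPEEDUP)
    print(f"{fraction:.0%} accelerated -> {speedup:.1f}x overall")

print(f"fraction needed for 2x overall: {fraction_for(2.0, KERNEL_SPEEDUP):.1%}")
```

Under this model, a 40x kernel gives about 2x overall when only about half of the total run time is spent in the accelerated code. Data transfer between the CPU and the FPGA, which the model ignores, would eat into that further.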

Even a 2x speedup seems great though because now they can cut their number of servers in half and “run a much greener data center” they say. That’s one way to look at it I guess, but the bean counters are going to realize that after paying additional salaries and spending all the additional money on expensive hardware, it will be time to upgrade the servers before they recoup that money on power and cooling expenses in their green data center.
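The bean counter argument boils down to a payback period. Here is a minimal sketch of that calculation; every dollar figure and the refresh cycle are hypothetical placeholders I picked for illustration, not data from Microsoft or Intel. It follows the simplified framing above and ignores other effects, such as buying fewer servers.

```python
def payback_years(extra_hardware_cost: float,
                  extra_annual_salaries: float,
                  annual_power_cooling_savings: float) -> float:
    """Years until power and cooling savings cover the added hardware cost.

    Returns infinity when yearly savings never exceed the yearly added cost.
    """
    net_annual_savings = annual_power_cooling_savings - extra_annual_salaries
    if net_annual_savings <= 0:
        return float("inf")
    return extra_hardware_cost / net_annual_savings


# All values below are hypothetical, per server.
SERVER_REFRESH_YEARS = 4.0
years = payback_years(
    extra_hardware_cost=15_000.0,          # FPGA premium, e.g. a $5k server becoming a $20k server
    extra_annual_salaries=500.0,           # FPGA engineering cost amortized per server
    annual_power_cooling_savings=2_500.0,  # assumed yearly savings
)

print(f"payback: {years:.1f} years, refresh cycle: {SERVER_REFRESH_YEARS:.1f} years")
if years < SERVER_REFRESH_YEARS:
    print("recouped before refresh")
else:
    print("not recouped before refresh")
```

With these made-up inputs the payback takes longer than the refresh cycle, which is the scenario described above. Different inputs can flip the conclusion, which is exactly why the numbers need to come from real deployments and not from marketing slides.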

Finally, the support for OpenCL in industry seems great, but in the FPGA and heterogeneous computing space, it is just another C-like language and framework, like many before it, that promised to allow software and system folks to design and integrate FPGA accelerated hardware, but fell short. Look at Celoxica’s Handel-C, SystemC, SystemVerilog and many others. They may still have industry support, but that doesn’t change the fact that relatively few people need to use them because the number and type of tasks that benefit from FPGA acceleration is still relatively small. And, getting a C-like language to synthesize efficient digital hardware from high level descriptions without knowledge of the underlying hardware is very difficult to do. Even AMD has its doubts about OpenCL.

The Bottom Line

I don’t think FPGA coprocessors in CPUs will see any real demand until maybe Moore’s law finally dies and investments in technology become much longer term. I think process technology will continue to shrink for a while and get smaller than most people expect.

And, if tools to synthesize digital FPGA designs from high level languages aren’t much better by then, it will still be quicker, cheaper and easier to do something clever like move your data center to Norway where the air is cold and there is an abundance of free geothermal energy, or take servers out of their cases to improve cooling efficiency by 2x, or rack up a bunch of game consoles like Xbox Ones or PlayStation 4s!

For now, I think Intel is just trying to give customers what they want because the customer is always right and in this case Intel probably stands to make a small fortune on these data center experiments.

Also, I think Intel is doing its best to retain customers while it comes up with its response to AMD’s wildly successful heterogeneous system architecture (HSA) that has wrapped up all of the Xbox One and PlayStation 4 business and now is beginning to threaten Intel’s business again.

The threat from ARM is different with their huge amount of pull in the mobile space which is driven by extremely low power and high performance requirements and delivered in volume by highly customized system-on-a-chip (SoC) solutions.

I would love to see FPGA coprocessors be part of Intel’s actual response to AMD’s HSA and ARM’s agility with SoC. But, I don’t think FPGAs will constitute a big part of that response, and I think they will ultimately fail to make a significant impact in big datacenters near term.